GitHub - AGI-Edgerunners/LLM-Adapters: Code for our EMNLP 2023 Paper: "LLM-Adapters: An Adapter Family for Parameter-Efficient Fine-Tuning of Large Language Models"

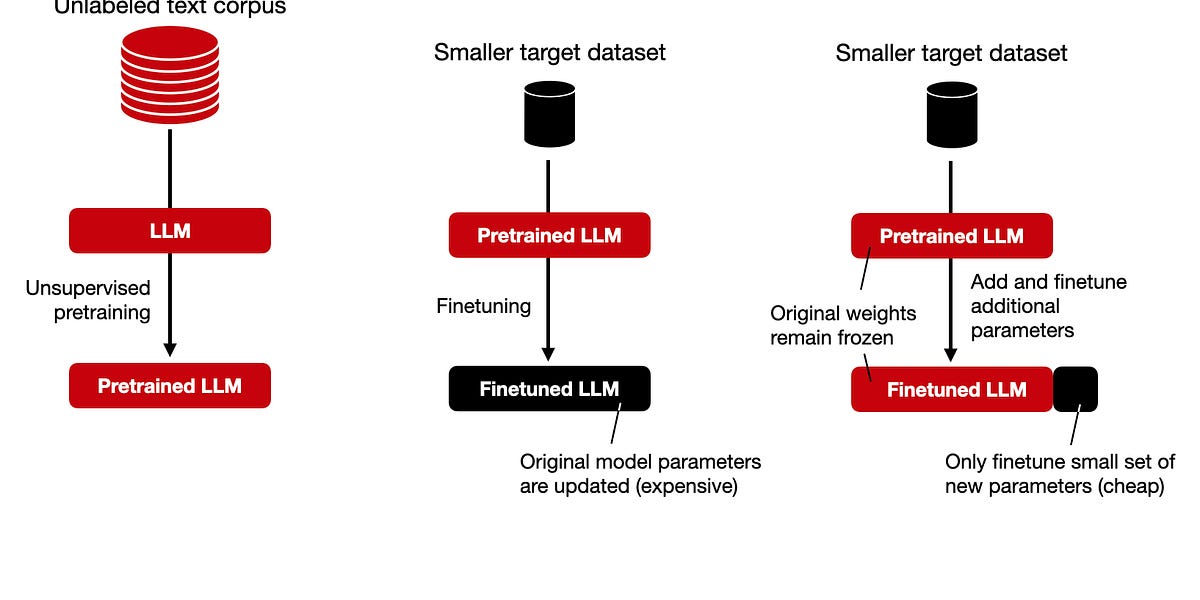

OpenAI: How to fine-tune LLMs with one or more adapters. | Damien Benveniste, PhD posted on the topic | LinkedIn

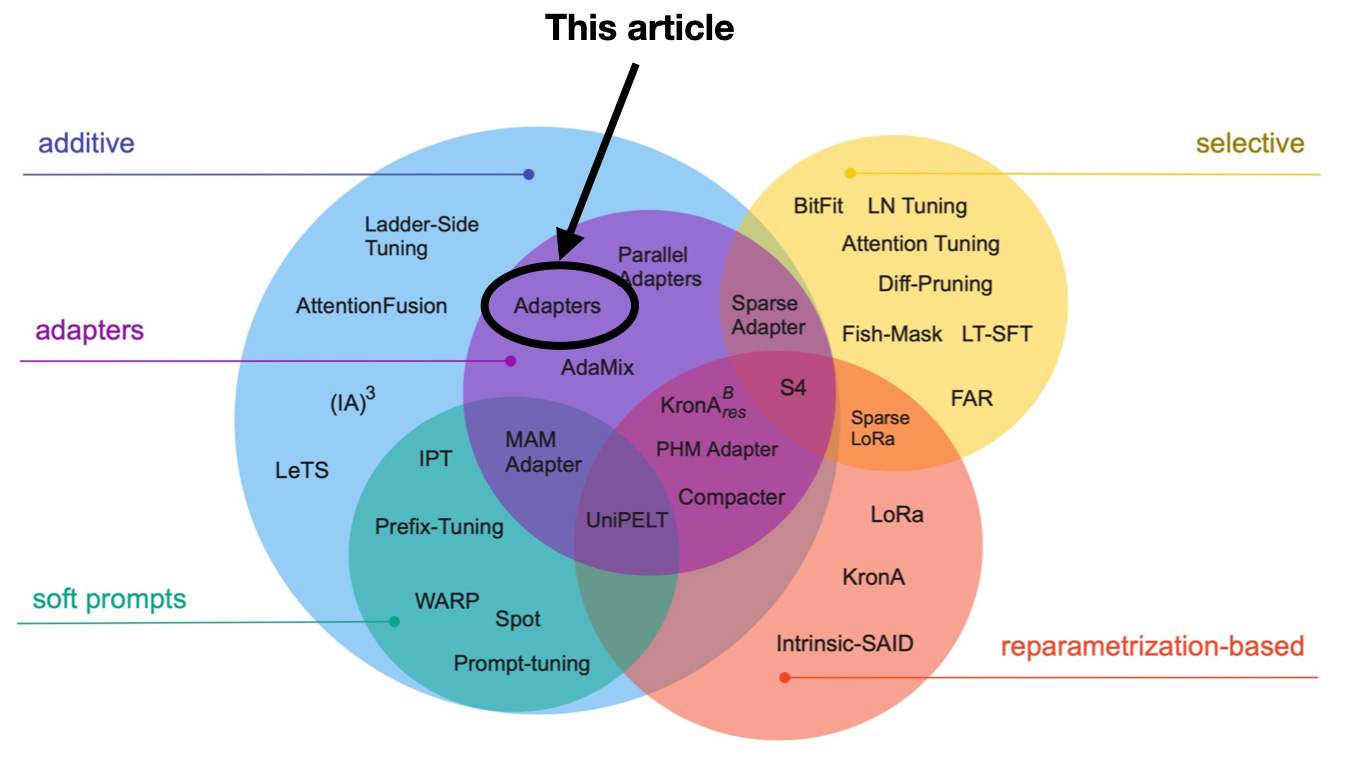

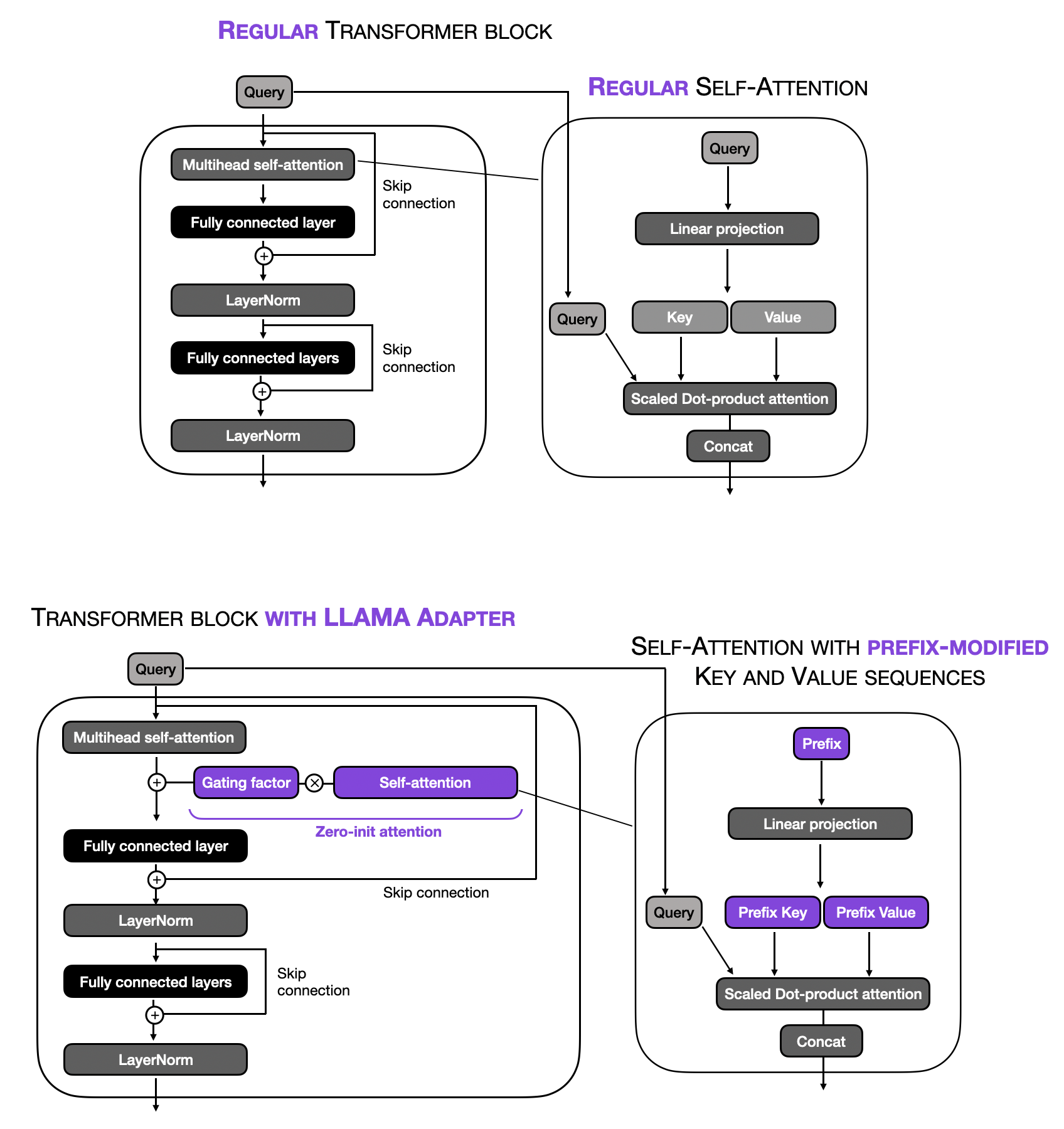

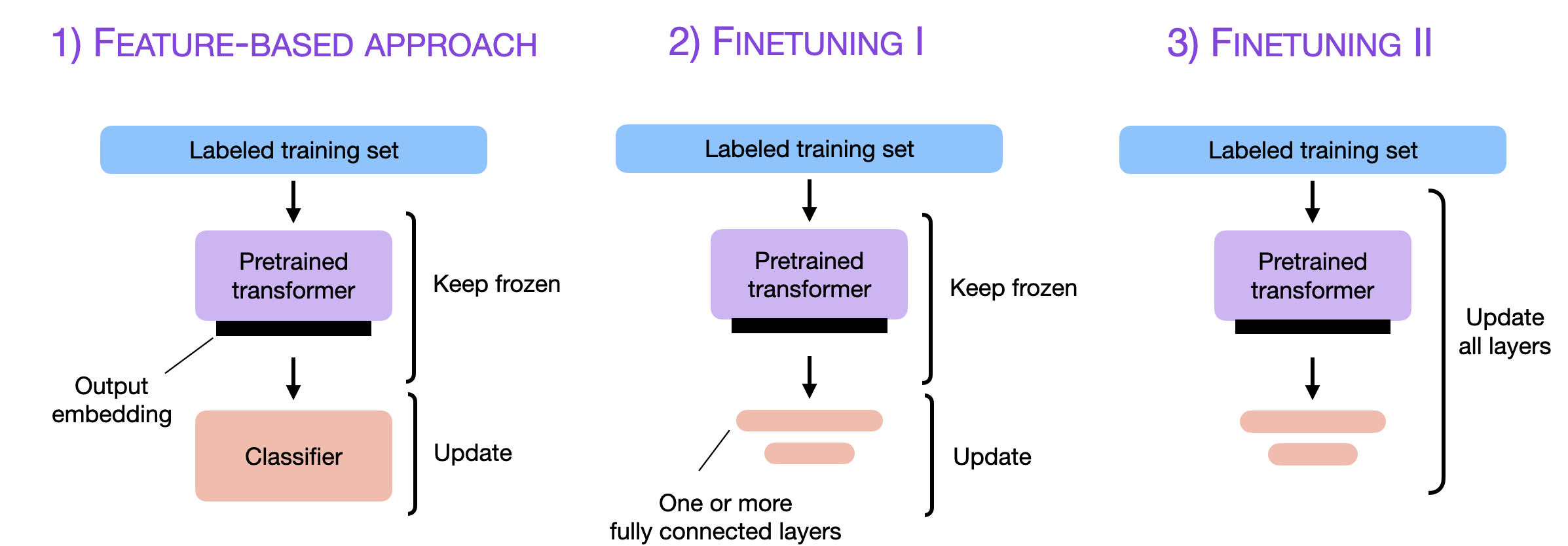

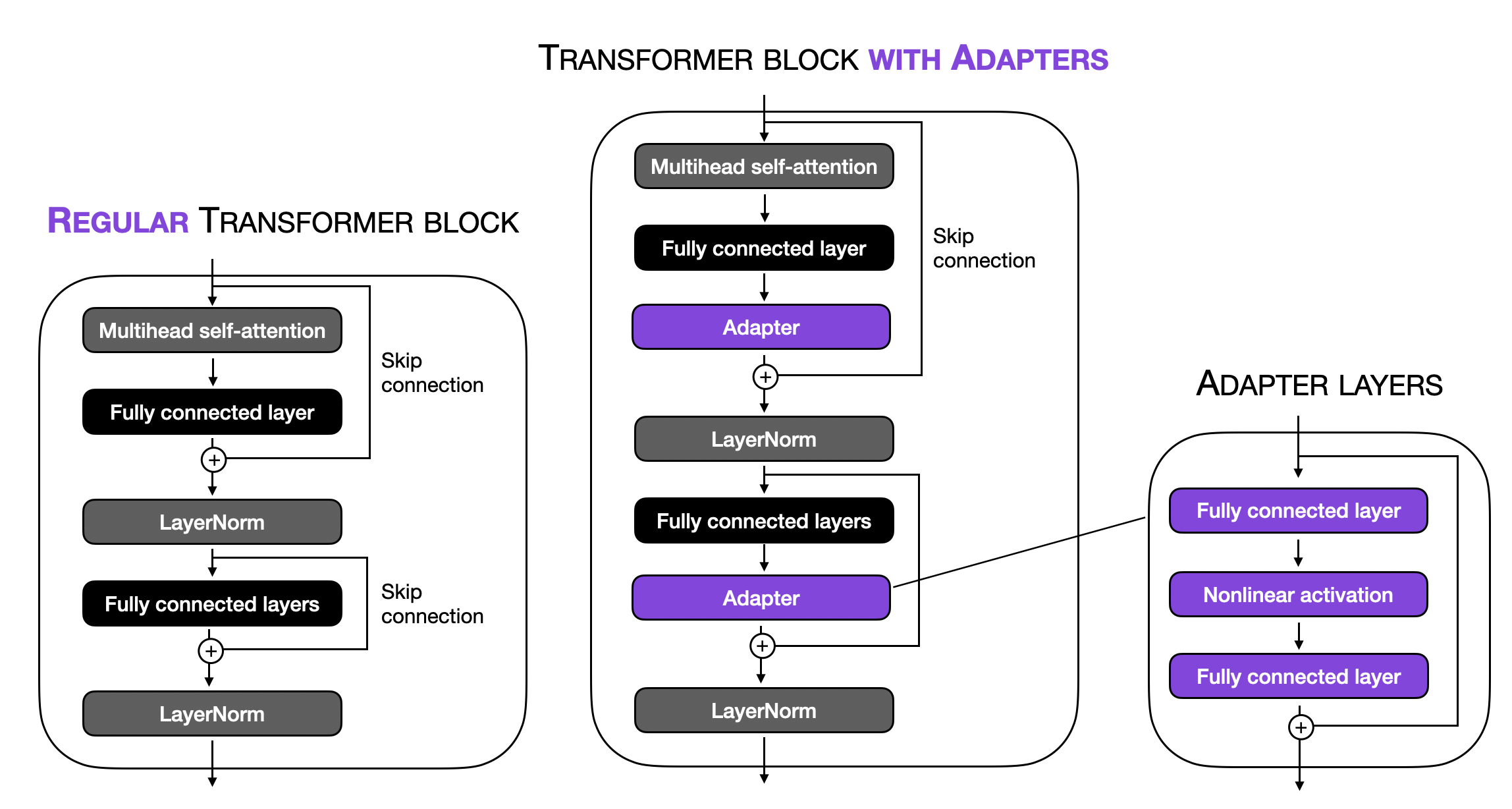

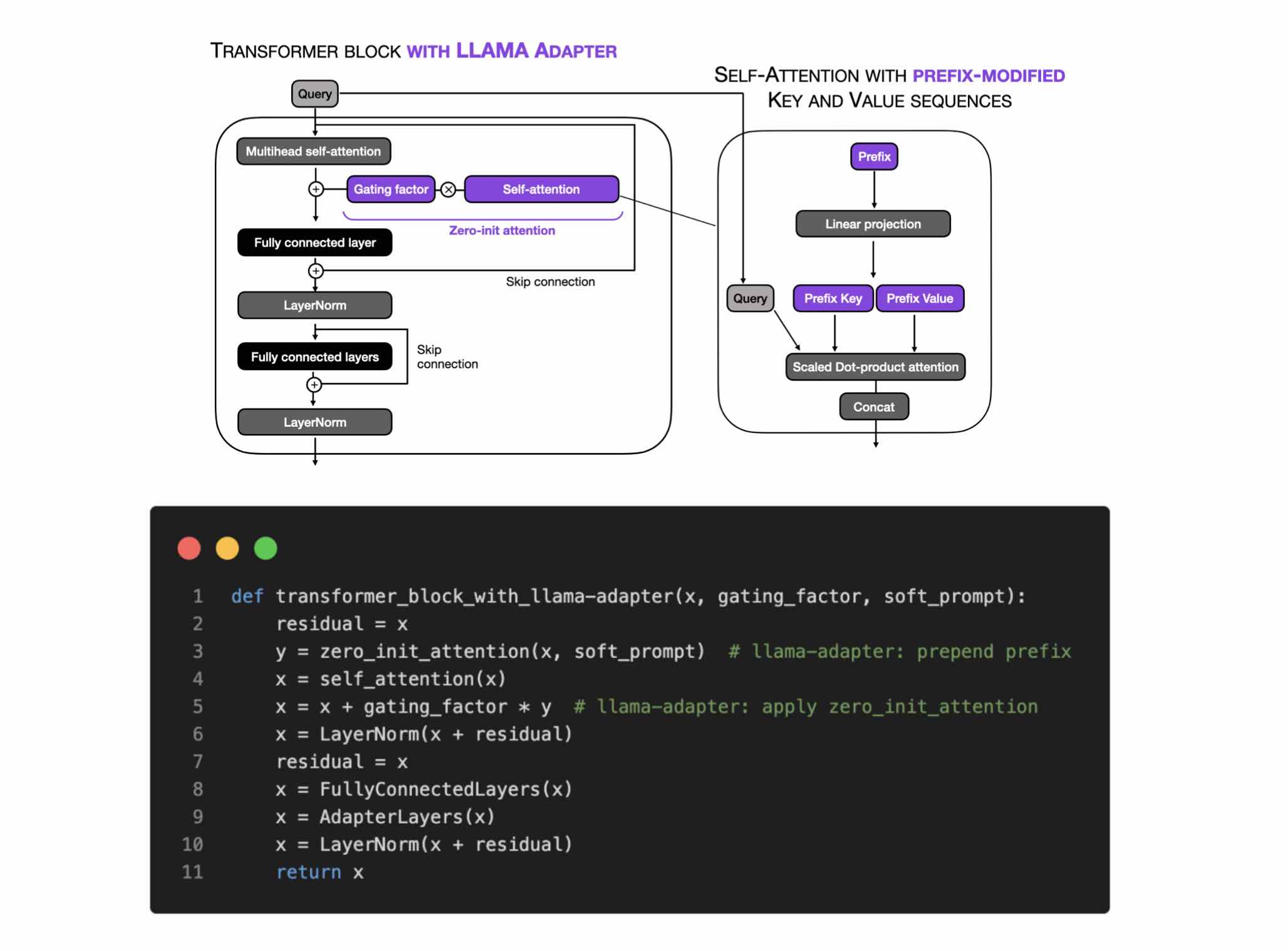

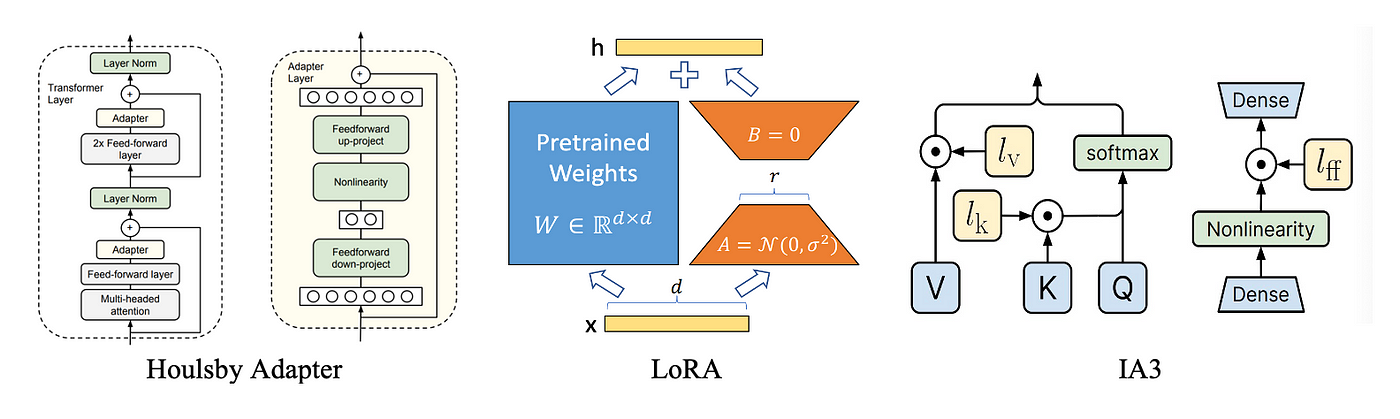

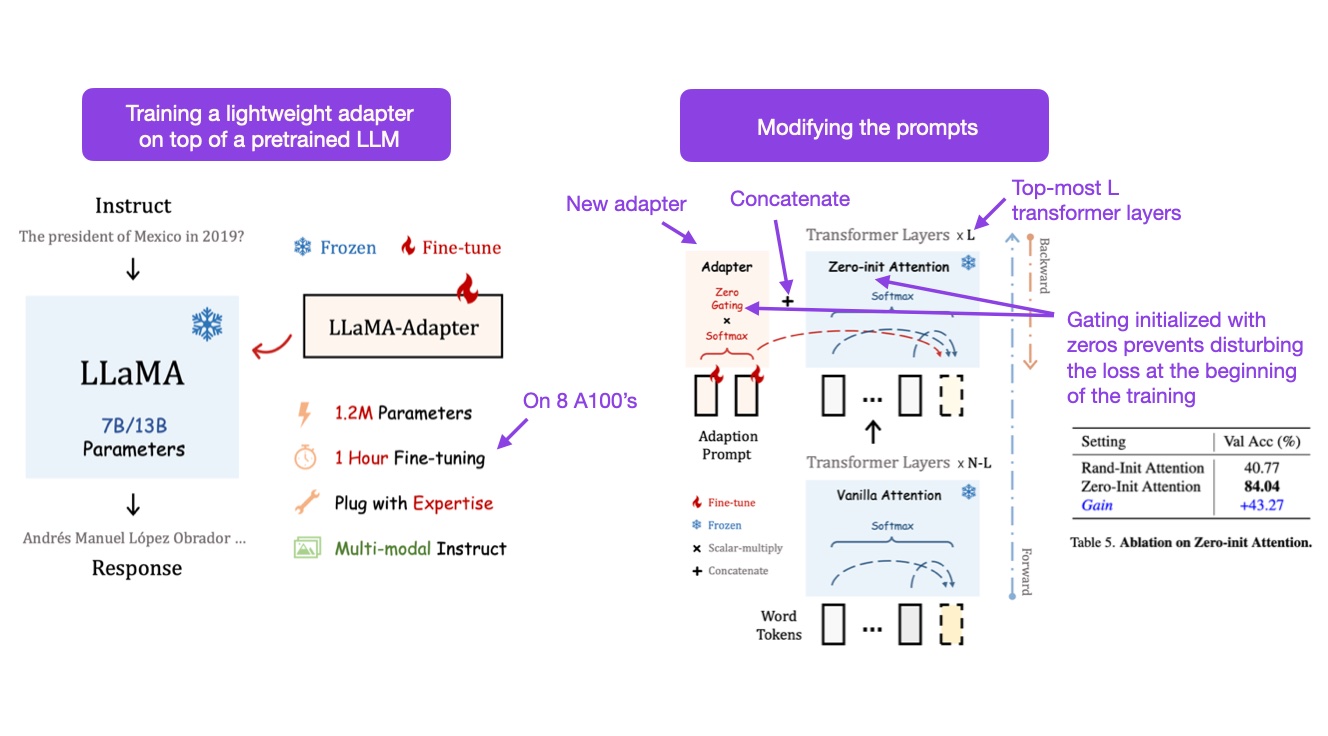

Understanding Parameter-Efficient Finetuning of Large Language Models: From Prefix Tuning to LLaMA-Adapters

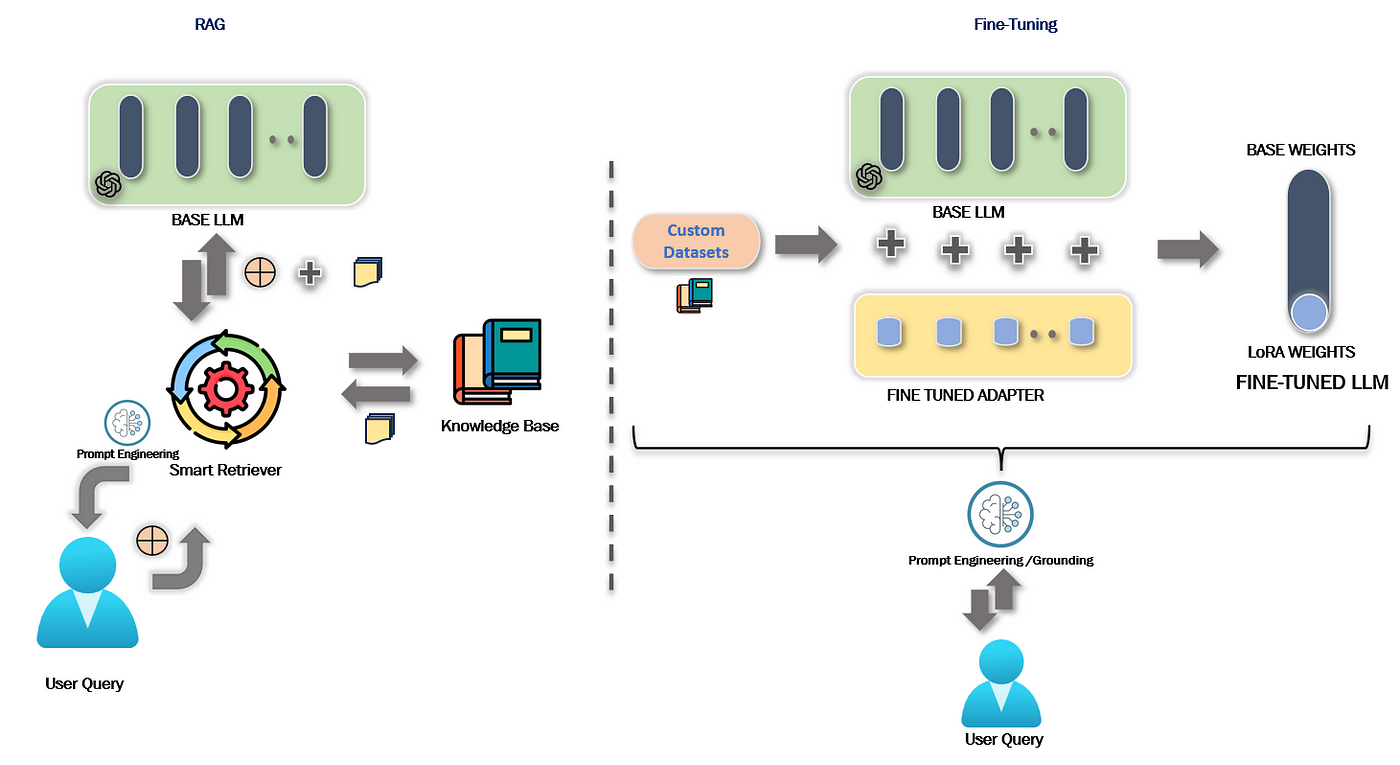

Fine Tuning Open Source Large Language Models (PEFT QLoRA) on Azure Machine Learning | by Keshav Singh | Dev Genius

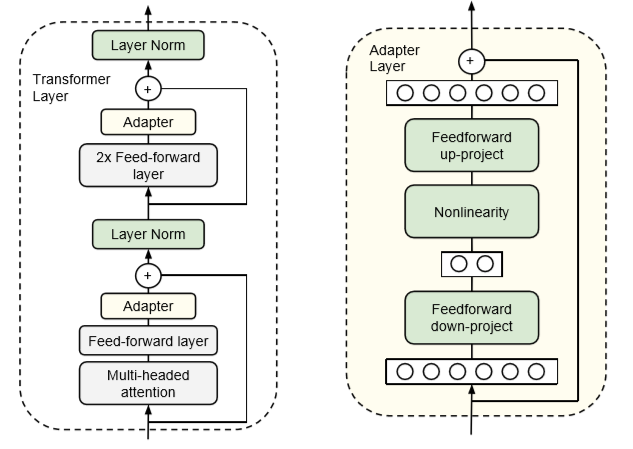

Practical FATE-LLM Task with KubeFATE — A Hands-on Approach | by FATE: Federated Machine Learning Framework | Medium

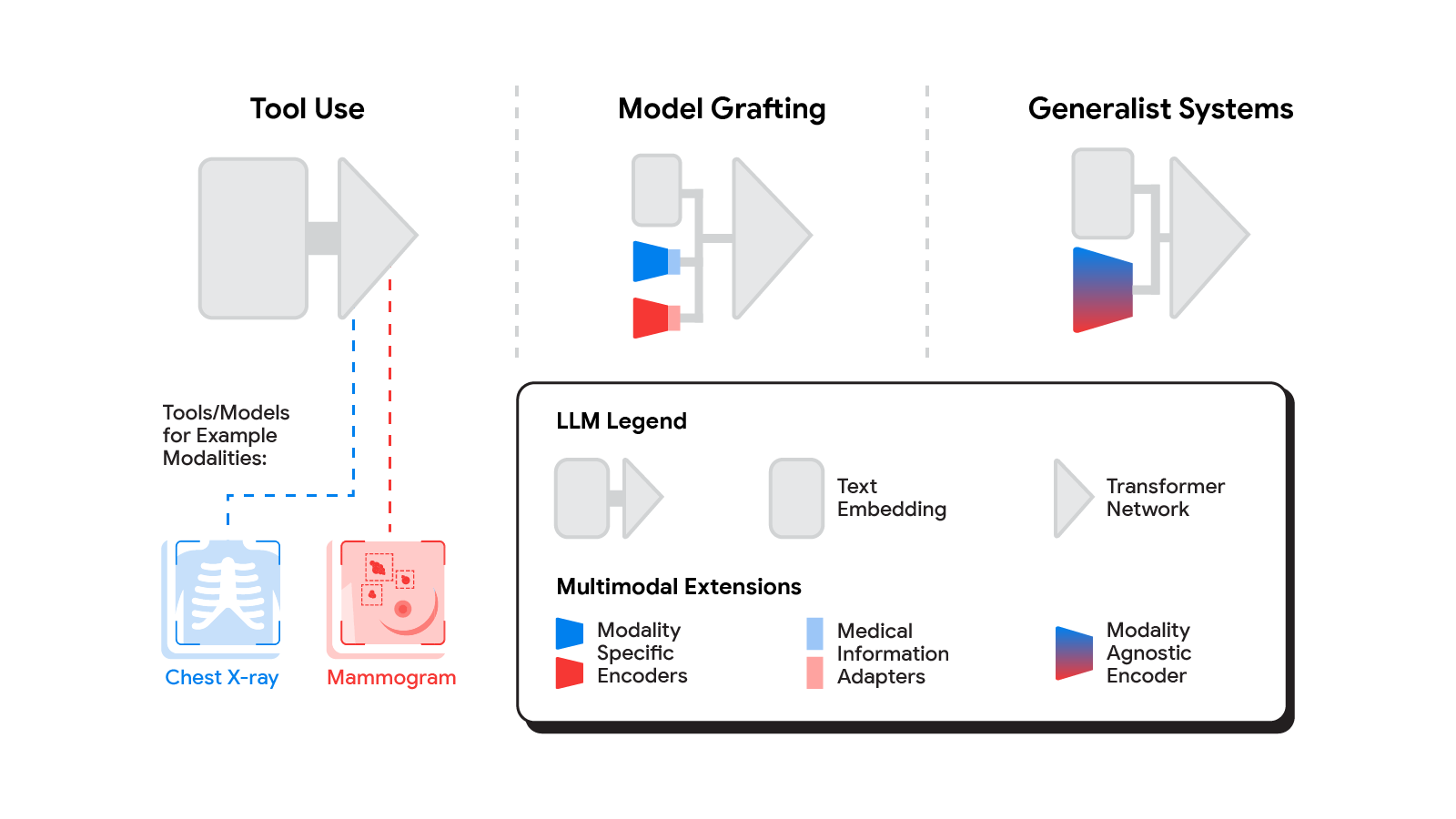

![\[Research\] LLM-CXR: Direct image generation using LLMs without StableDiffusion nor Adapter : r/MachineLearning](https://preview.redd.it/research-llm-cxr-direct-image-generation-using-llms-without-v0-g0wav2gksq3b1.png?width=4000&format=png&auto=webp&s=0efa319d1bd199034e4b686fdd53e2a540df0043)

[Research] LLM-CXR: Direct image generation using LLMs without StableDiffusion nor Adapter : r/MachineLearning

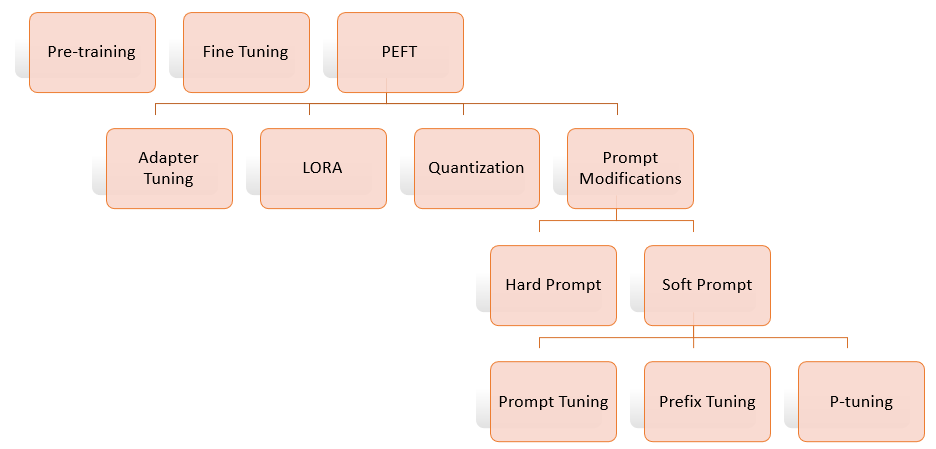

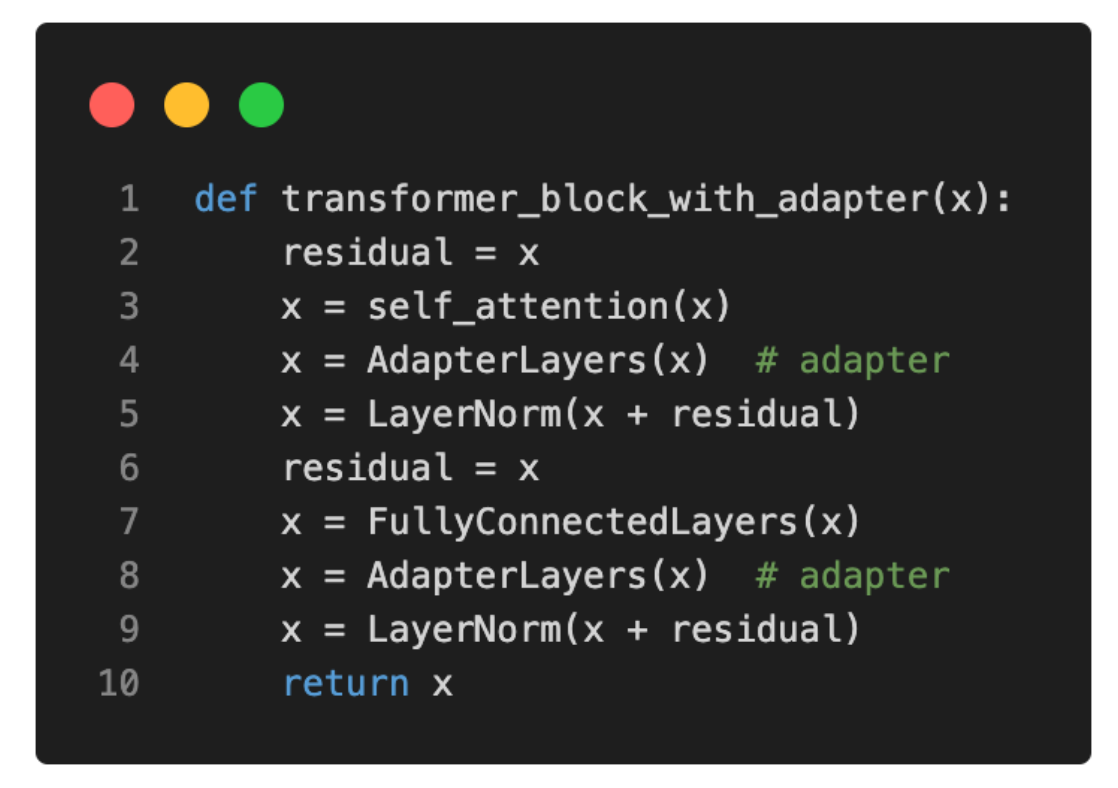

Summary Of Adapter Based Performance Efficient Fine Tuning (PEFT) Techniques For Large Language Models | smashinggradient

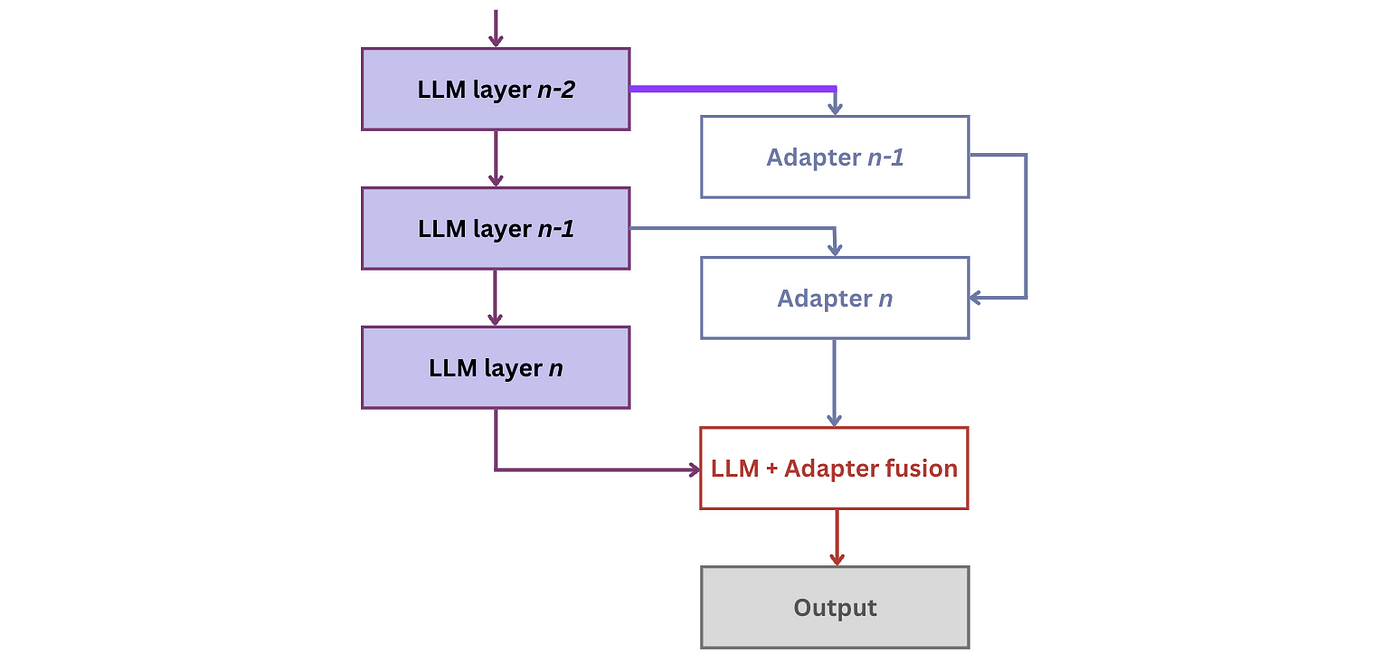

Sebastian Raschka on X: "LLaMA-Adapter: finetuning large language models (LLMs) like LLaMA and matching Alpaca's modeling performance with greater finetuning efficiency Let's have a look at this new paper (https://t.co/uee1oyxMCm) that proposes